A look at computational performance for an application running on an x86 CPU with Linux and Windows 95/98/NT, and how they compare.

Many individuals in the Linux community periodically express the belief that Linux “seems to be a faster environment” than Windows 95 and 98. However, it's difficult to find hard confirming evidence about Linux's speed, and little comparative performance data is readily available. As a result, many people considering adoption of Linux are probably unable to fully consider its performance in their decision. Of course, performance is only one of many factors that should be considered in evaluating an environment's suitability. For example, cost, reliability, usability and scalability are also important considerations.

In this article, we'll compare the computational performance of an application running under Linux and under Windows 95 and 98. We also examine whether the application's performance under Linux can match its performance under Windows NT. Last, we consider whether the performance of Linux on the leading x86 clone CPU, the AMD K6-2, makes that environment a viable alternative to Linux on Intel.

We hope these test results provide some much-needed evidence of the potential for excellent computational performance in the Linux environment.

A number of measures have been used to compare CPU and system performance. Two well-known benchmarks for measuring raw CPU computational capability are SPECint and SPECfp. Overall system performance has been measured using a variety of techniques, such as POVray rendering (frame) rates, Quake frame rates, Business Winstone ratings, etc. The system performance measures are especially useful to determine how fast a particular system will be for an end user of canned applications. However, the system measures are not as useful for predicting performance of applications developed by the user. Most of the popular benchmarks tend to gloss over the joint impact of the operating system and compilation tools on execution time. Additional factors, such as the particular mix of computations, system hardware and compilation settings, also impact end performance and are not isolated by most of the popular measures.

Therefore, our goal is to look at the impact of the operating system (and compilation tools) on computational performance. By isolating these factors, we can consider if Linux “measures up” to the performance of commercial Windows environments.

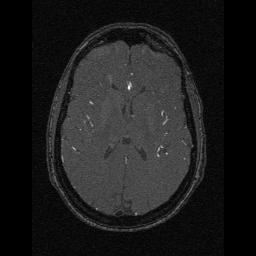

We tested the performance of a reasonably intensive C program which we developed and use in-house. (This program can be downloaded from ftp.linuxjournal.com/pub/lj/listings/issue67/3425.tgz.) The program implements a volume visualization technique on a medical data set. This application has moderate computational and memory requirements—the data set used in our tests is approximately 4.5MB in size and requires in excess of 300,000,000 arithmetic operations in C (most of which are floating-point calculations) to compute the visualization. (See Figure 1.) An application of this type is a reasonable test of the computational performance enabled by the operating systems and compilation tools.

Figure 1. Sample Slice of Data

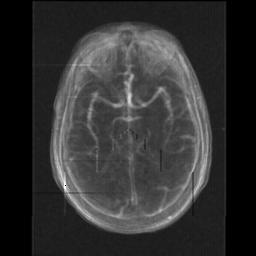

The visualization is static (no animation is involved), so we'll consider only the time to compute the visualization. The time to display the final image (see Figure 2) doesn't vary much between machines and invariably depends somewhat on the difference in graphics hardware, which is not one of the factors we wanted to consider. We also do not consider data input and output times; our goal is to see how efficient the operating systems and compilers are for computations.

Figure 2. Rendering of Data by Program

As much as possible, we wanted to concentrate in isolation on factors that would allow us to determine which combination of operating system and compilation tools produced the best performance. Luckily, our lab has a few dual-boot machines (i.e., multiple operating systems are installed on these machines and the desired OS can be selected upon boot-up). These machines are particularly useful for performance testing, because the same hardware is used for both Windows and Linux. However, to provide a larger set of data, we also looked at performance on several single-boot PCs.

During our tests, we ensured that no other non-OS tasks were running on the computers. Although there is some evidence that UNIX in general may exhibit more graceful degradation in performance under increasing system load than Windows 95/98, it is challenging to duplicate comparable loads across different machines, so we'll concentrate mostly on unloaded performance. The tests were conducted immediately following system reboot, and the average of the first three runs following reboot is reported. Computation time was determined using the standard C clock function, which returns process CPU time (at least under Linux; later, we'll discuss a bug in the clock of many Windows environments). To ensure the most optimistic measure of time, we launched the application using several mechanisms and reported only the best time. Sometimes an application under Windows runs fastest within a compilation development environment, but in other cases, an application will run fastest directly from a command-line prompt. We report whichever produced the fastest execution time.
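The timing procedure described above can be sketched in C roughly as follows. This is a minimal illustration and not the test harness used for the article: `dummy_workload` is a hypothetical stand-in for the visualization code, and the function names are invented for this example. Only `clock` and `CLOCKS_PER_SEC` come from the standard C library.

```c
#include <assert.h>
#include <time.h>

/* Hypothetical stand-in for the real workload: a simple floating-point loop. */
double dummy_workload(long iterations)
{
    volatile double sum = 0.0;  /* volatile keeps the loop from being optimized away */
    long i;

    for (i = 1; i <= iterations; i++)
        sum += 1.0 / (double)i;
    return sum;
}

/* CPU seconds consumed by one run of the workload, measured with clock(). */
double time_one_run(long iterations)
{
    clock_t start = clock();

    (void)dummy_workload(iterations);
    return (double)(clock() - start) / CLOCKS_PER_SEC;
}

/* Mean CPU time over `runs` consecutive runs (the article averages three). */
double mean_cpu_seconds(int runs, long iterations)
{
    double total = 0.0;
    int i;

    assert(runs > 0);
    for (i = 0; i < runs; i++)
        total += time_one_run(iterations);
    return total / runs;
}
```

Calling `mean_cpu_seconds(3, n)` right after a reboot would mirror the procedure in the text, with `n` chosen to make each run long enough for the resolution of `clock` not to matter.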

The following computers were utilized for the tests:

PC 1: Pentium II/233MHz with 96MB 66MHz SDRAM, 4.3GB Ultra DMA hard disk and dual-bootable to Windows NT or Red Hat Linux 5.2.

PC 2: Pentium II/400MHz with 128MB 100MHz SDRAM, 9GB Ultra DMA hard disk and dual-bootable to Windows NT or Red Hat Linux 5.2.

PC 3: AMD K6-2/300MHz with 64MB 100MHz SDRAM, 4.3GB Ultra DMA hard disk and dual-bootable to Windows 95 OSR 2 (with Ultra DMA disk drivers) or Red Hat Linux 5.2.

PC 4: Pentium II/350MHz with 128MB 100MHz SDRAM, 6.4GB Ultra DMA hard disk and Windows NT.

PC 5: Pentium II/350MHz with 128MB 100MHz SDRAM, 6.4GB Ultra DMA hard disk and Windows 98.

PC 6: Pentium II/450MHz with 128MB 100MHz SDRAM, 6.4GB Ultra DMA hard disk and Windows 98.

PC 7: Pentium II/400MHz with 256MB 100MHz SDRAM, 2 x 8GB Ultra DMA hard disks and Red Hat Linux 5.1.

PC 8: Pentium II/350MHz with 64MB 100MHz SDRAM, 4.3GB Ultra DMA hard disk and Windows NT.

PC 9: Pentium II/400MHz, identical hardware to PC 2 but bootable only to Windows NT.

Several compilers were used to build executables for testing. In the Windows environment, we used Microsoft Visual C/C++ Professional versions 4.0, 5.0 and 6.0. In addition, Metrowerks CodeWarrior Professional versions 3.0 and 4.0 were used. In the Linux environment, the GNU C compiler (gcc version 2.7.2.3) and the Pentium GNU C compiler (pgcc version 2.91.57, based on the experimental GNU compilation system egcs version 1.1) were used.

To obtain the best performance for the application, we exhaustively tested execution time using a large number of combinations of compiler optimization options. In this article, the reported execution times reflect the best combination of optimization options found for each compiler. In addition, each test was run on multiple occasions to reduce the risk of background network or computer activity producing suboptimal results.

For the Windows system, we typically experienced the best performance immediately after reboot; thus, we conducted the tests upon reboot. The Windows 95 and Windows 98 machines typically executed 2 to 5% slower before reboot. On NT machines, the time differential was less; performance was between 0 and 2% slower before reboot. On the Linux systems, we did not experience any noticeable difference in execution times between freshly booted systems and systems that had not been rebooted recently.

The first tests compare performance of an application built and run in the Linux environment with that of one built in the NT environment.

Table 1 compares the application's execution times on dual-boot machines from gcc and pgcc compilation under Linux with the performance using Microsoft Visual C and Metrowerks CodeWarrior compilation under NT. The application executed with efficiency when compiled with Code Warrior 4.0 or pgcc. For the Pentium II/400 system, the application ran fastest when compiled using pgcc under Linux. Although the program ran next fastest when compiled with Code Warrior 4.0, the GNU C compiler produced a faster executable than Code Warrior 3.0. Code produced by Visual C executes considerably more slowly than code produced with the free Linux GNU tools or the commercial Metrowerks product. Based on the PII/400, Linux with pgcc allows approximately 2% faster execution than NT with Code Warrior 4.0. However, on the PII/233, NT with Code Warrior 4.0 was barely faster (0.5%) on a freshly booted system. It is interesting that the application ran 27 to 35% faster after being compiled under Linux with gcc than when it ran under NT with Visual C. The pgcc-compiled application ran 30 to 50% faster under Linux than the same application compiled under Visual C on NT. We also found it interesting that for the PII/233, better performance was achieved using Visual C version 4.0 than when Visual C version 6.0 was used.

On the PII/233, the Visual C-compiled binaries ran about 1% slower before reboot than after. For example, the Visual C 6.0 binary executed in 36.03 seconds on a system that had been up for several hours and in 35.62 seconds on a system that had been freshly booted.
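The "about 1%" figure follows directly from the two times quoted above. As a small worked example (the helper name `percent_slower` is invented here for illustration), the relative slowdown can be computed like this:

```c
#include <assert.h>

/* Percentage by which `slower` exceeds `baseline`; both are times in seconds. */
double percent_slower(double slower, double baseline)
{
    assert(baseline > 0.0);
    return 100.0 * (slower - baseline) / baseline;
}
```

With the Visual C 6.0 times from the text, `percent_slower(36.03, 35.62)` evaluates to roughly 1.15, since (36.03 - 35.62) / 35.62 is about 0.0115.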

Therefore, it appears that freeware compilation tools on Linux can produce code that runs, under general conditions, at least as fast and possibly up to 4% faster than code produced using a very efficient commercial compiler for NT. Clearly, Linux with gcc and pgcc can produce code that runs remarkably faster than code compiled using the Visual C tools under NT or 95.

We will report the most optimal execution times we could achieve. For the Visual C compiler, the fastest execution times were achieved using the maximize speed (/O2), multithread and Pentium code options in Release mode. The maximize speed option includes inline expansion, global optimization, intrinsic function generation, frame-pointer omission and several other optimizations. There was little overall difference in execution times for the three versions of Visual C, although Visual C 4.0 code tended to execute slightly faster than Visual C 5.0 or 6.0 on the majority of the machines in our test.

For the Metrowerks Code Warrior C compiler, the fastest executables were achieved using the “Maximize Speed” optimization for a Pentium II target. CodeWarrior allows the developer to select if MMX instructions should be generated when possible, but MMX instruction generation produced well under a 1% difference in our application's execution time. We've reported the fastest time we could achieve for a given computer; if the fastest time was with MMX instruction generation, that is what is reported.

Neither Code Warrior's nor Visual C's clock function conforms to the C standard. Instead of returning the process's CPU time, these compilers' clock functions return the total CPU time for all active processes. Under NT, however, the GetProcessTimes function is available from Visual C and can be used to find the actual process CPU times. Our reported times from Visual C on NT are based on the output from the GetProcessTimes function. However, since we made sure no non-operating system processes were competing for the CPU during our tests, it was typical for the CPU times from GetProcessTimes to be only 0.1 seconds less than the time reported by clock. The clock function in gcc and pgcc conforms to the C standard. We also examined wall-clock time using C's time function and noticed that the times reported by clock were all within a second of the output from time. The resolution of time is one second, so this is the closest agreement possible. Adding to our confidence that the application was consuming 99% or more of CPU resources while executing was a separate set of tests using WinTop, top and the NT task-monitor tools, which all consistently reported that our application was consuming 99% of the CPU resources.
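One way to read per-process CPU time portably is sketched below. This is an assumption about how such a measurement could be written, not the code used for the article. On Win32 it calls `GetProcessTimes`, which reports kernel and user time as `FILETIME` values in 100-nanosecond units; elsewhere it falls back to the standard `clock`. The helper names (`ticks_100ns_to_seconds`, `process_cpu_seconds`, `cpu_seconds_since`) are hypothetical.

```c
#include <assert.h>
#include <time.h>
#ifdef _WIN32
#include <windows.h>
#endif

/* Convert a count of 100-nanosecond ticks to seconds. */
double ticks_100ns_to_seconds(unsigned long long ticks)
{
    return (double)ticks / 1.0e7;
}

/* CPU seconds (user plus kernel) charged to this process so far, or -1.0 on failure. */
double process_cpu_seconds(void)
{
#ifdef _WIN32
    FILETIME created, exited, kernel, user;
    ULARGE_INTEGER k, u;

    if (!GetProcessTimes(GetCurrentProcess(), &created, &exited, &kernel, &user))
        return -1.0;
    k.LowPart = kernel.dwLowDateTime;
    k.HighPart = kernel.dwHighDateTime;
    u.LowPart = user.dwLowDateTime;
    u.HighPart = user.dwHighDateTime;
    return ticks_100ns_to_seconds(k.QuadPart + u.QuadPart);
#else
    clock_t c = clock();  /* process CPU time where clock() follows the C standard */

    return (c == (clock_t)-1) ? -1.0 : (double)c / CLOCKS_PER_SEC;
#endif
}

/* CPU seconds used since an earlier reading from process_cpu_seconds(). */
double cpu_seconds_since(double start)
{
    double now = process_cpu_seconds();

    assert(start >= 0.0 && now >= start);  /* both readings must be valid */
    return now - start;
}
```

Taking one reading before the computation and passing it to `cpu_seconds_since` afterward gives a figure that can be compared against the value from `clock`, which is the kind of cross-check described in the text.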

For the GNU C (gcc) compiler, optimization level 2 (-O2) produced the fastest executables.

For the Pentium gcc (pgcc) compiler, optimization level 3 (-O3) with fast-math and function inlining (-ffast-math -finline-functions) gave the fastest execution times.

For comparison, we also ran the application on a single-boot Pentium II/400 (PC 7) running Red Hat Linux 5.1. The execution time using gcc was slightly better (14.67 seconds) on this machine than it was on PC 2. The application's execution time when compiled with pgcc was similar (13.79 seconds) to the time for PC 2.

We also tested our application's performance when run under the Windows 98 operating system. PC 4 and PC 5 have identical hardware components (e.g., the motherboards, memory, video cards, etc. are identical) except that PC 4 runs Windows NT and PC 5 runs Windows 98. Our application tends to run about 15% slower under Windows 98 than under NT. These results are summarized in Table 2. Therefore, although the application can run approximately as fast on an NT machine as under Linux (provided the program was compiled using CodeWarrior 4.0 for NT and the NT machine had been rebooted fairly recently), Linux appears to be decidedly more efficient than Windows 98.

We also ran the application on PC 6, a Pentium II/450 with Windows 98. Then, we applied linear regression to all of the execution times for the various compilers under all of the operating systems, so that we could interpolate and extrapolate the likely performance on newly booted systems across the Pentium line. Table 3 summarizes these results.
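For readers unfamiliar with the technique, an ordinary least-squares line fit can be sketched as below. The article does not state which variables were regressed or how the fit was computed, so this is only a generic illustration: the `LinearFit` type and the `fit_line` and `predict` functions are hypothetical, and the choice of x (for example, clock speed or its reciprocal) is left to the caller.

```c
#include <assert.h>
#include <stddef.h>

/* Coefficients of the fitted line y = intercept + slope * x. */
typedef struct {
    double slope;
    double intercept;
} LinearFit;

/* Ordinary least-squares fit over n (x, y) points; needs at least two distinct x values. */
LinearFit fit_line(const double *x, const double *y, size_t n)
{
    double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0, denom;
    LinearFit fit;
    size_t i;

    assert(n >= 2);
    for (i = 0; i < n; i++) {
        sx += x[i];
        sy += y[i];
        sxx += x[i] * x[i];
        sxy += x[i] * y[i];
    }
    denom = (double)n * sxx - sx * sx;
    assert(denom != 0.0);  /* all x values identical: no unique line */
    fit.slope = ((double)n * sxy - sx * sy) / denom;
    fit.intercept = (sy - fit.slope * sx) / (double)n;
    return fit;
}

/* Interpolate or extrapolate y at a new x using the fitted line. */
double predict(LinearFit fit, double x)
{
    return fit.intercept + fit.slope * x;
}
```

As a check with synthetic points that are not measurements, fitting x = {1, 2, 3, 4} against y = {3, 5, 7, 9} recovers a slope of 2 and an intercept of 1, so `predict` returns 11 at x = 5.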

One question that has interested us for a long time is whether Linux on “clone” CPUs is a viable alternative. We have a dual-boot machine that has an AMD K6-2 CPU and decided to test its performance for applications run under Linux and Windows 95. The K6-2 microprocessor supports 3DNow! instructions that can enable faster performance for certain floating-point computations. However, most compilers cannot generate the 3DNow! instructions, so the 3DNow! capability is usually not fully exercised. In fact, to our knowledge, the Code Warrior C compiler is the only compiler that will attempt to generate 3DNow! instructions. (gcc and pgcc do not exercise the 3DNow! capabilities of the K6-2.)

Our machine (PC 3) has a 300MHz K6-2 CPU. The machine runs both Red Hat 5.2 Linux and Windows 95. Table 4 summarizes execution times on this machine when our application was compiled and run in the different environments. We tested execution times for Code Warrior binaries produced with and without 3DNow! optimization, and found the 3DNow!-optimized program ran about 7% faster than the program without it. Otherwise, we found that using nearly the same compiler optimization settings we had used for the Intel machines produced the best K6-2 performance. The fastest execution time was achieved using pgcc with level 4 optimization. Although pgcc does not generate 3DNow! instructions, the x86 code it generates runs faster under Linux than the best-optimized binary runs under Windows 95.

Another way to look at our results is to compare the K6-2's performance under Windows and Linux with the performance of Pentium II machines. By applying the same linear regression approach we used above, we find that pgcc under Linux is the most efficient at exploiting the K6-2. Using Linux, our 300MHz K6-2 performs similarly to the predicted performance of a pgcc binary on a Pentium II clocked at 270MHz. That is, our K6-2/300 ran the application faster than a Pentium II/266 would be expected to run it under Linux. Table 5 shows the predicted clock speed of the Pentium II that would execute our application in comparable time using the various compilers. The Windows-based environments could not unlock the potential of the K6-2 as well as pgcc and Linux.

So, is the K6-2 a viable alternative to Pentium II, especially for Linux users? The answer would appear to be yes. Using pgcc in Linux seems to allow the K6-2 to perform like a Pentium II running at a clock speed that's about 10% slower than that of the K6-2. Considering the price differential between a K6-2 CPU and motherboard combination and a comparable Pentium II combination, Linux on the K6-2 can be attractive in terms of both price and performance.

The final experiment we conducted was to look at the total wall-clock (elapsed) time to execute multiple copies of our application simultaneously. This test is a measure of the scaling ability of Linux and NT and implicitly tests basic operating system efficiency at operations such as context switching and memory management, including cache management. We found that both Linux and NT scaled well over the tested range—up to 12 identical copies of the computations running at once. Under both Linux and NT, all the tasks began and ended computation in essentially the same pattern, and average computation time remained fairly stable.
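On a POSIX system such as Linux, a load test of this kind could be driven by a small launcher like the one below. The article does not describe how the copies were started, so this is an assumed approach: `busy_work` is a hypothetical stand-in for the visualization computation, and `wall_seconds` and `run_simultaneous` are names invented for this sketch. It uses `fork`, `wait` and `gettimeofday`, so it does not apply to the NT side of the test.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/time.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical stand-in for one copy of the computation. */
void busy_work(long iterations)
{
    volatile double sum = 0.0;  /* volatile keeps the loop from being optimized away */
    long i;

    for (i = 1; i <= iterations; i++)
        sum += 1.0 / (double)i;
}

/* Wall-clock seconds since the epoch, with microsecond resolution. */
double wall_seconds(void)
{
    struct timeval tv;

    gettimeofday(&tv, NULL);
    return (double)tv.tv_sec + (double)tv.tv_usec / 1.0e6;
}

/* Run `jobs` copies of the workload at once and return the elapsed
   wall-clock seconds, or -1.0 if a fork or a child failed. */
double run_simultaneous(int jobs, long iterations)
{
    double start;
    int i, started = 0, ok = 1;

    assert(jobs > 0);
    start = wall_seconds();
    for (i = 0; i < jobs; i++) {
        pid_t pid = fork();

        if (pid == 0) {          /* child: do the work and exit immediately */
            busy_work(iterations);
            _exit(0);
        }
        if (pid < 0) {           /* fork failed: stop launching, reap what started */
            ok = 0;
            break;
        }
        started++;
    }
    for (i = 0; i < started; i++) {
        int status;

        if (wait(&status) < 0 || !WIFEXITED(status) || WEXITSTATUS(status) != 0)
            ok = 0;
    }
    return ok ? wall_seconds() - start : -1.0;
}
```

Dividing the result of `run_simultaneous(12, n)` by 12 and comparing it with the time for a single job gives a per-job overhead figure comparable to the percentages reported for this experiment.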

On the NT side, we conducted one set of trials using applications compiled using Visual C 5 and two other sets of trials using applications compiled under Code Warrior 4. Two sets of trials using pgcc were also conducted. Table 6 summarizes the most strenuous tests, 12 jobs running at once. The results in the table for pgcc and Code Warrior reflect the mean results, although there was essentially no difference in measured times for the trials. It is interesting to note that under Linux, the average time per run for the 12 simultaneous executions was only 1.2% higher than the CPU time to run one job. Under NT, the average time per job increased 2.9% for the Visual C binaries and 7.5% for the Code Warrior 4.0 binaries. It is particularly interesting that on the tested machine, a Pentium II/233—which, according to our linear regression model, is one of the only machines where NT was predicted to execute the application faster than Linux would—the total time to execute 12 jobs at once under Linux is 5.5% less than the total time to execute the jobs under NT.

Does Linux scale well? These results suggest that Linux scales very well indeed and enjoys a performance advantage over NT.

Is Linux a viable alternative to Windows in terms of computational performance? Our results indicate that a substantial application compiled with freeware compilers under Linux can probably be expected to execute no more than 1% slower than when compiled and executed under one of the most efficient Windows NT commercial compilation systems. Under general usage (i.e., on a machine that hasn't been rebooted recently), it may be possible for an application to run up to 4% faster under Linux than under NT. Linux seems to scale very well, and in our 12-job test on one machine, it outperformed NT under heavy load.

Our tests also show that Linux appears to allow approximately 15% better performance than Windows 98. The benefit of Linux is even more clear-cut when performance of applications compiled with pgcc and gcc is compared to performance when compiled with Visual C under Windows 95/98/NT.

Linux on non-Intel CPUs, such as the AMD K6-2, also appears to be a very viable alternative to Linux on Intel's Pentium II. Although reasonably sized applications can apparently execute faster on a Pentium II than on a comparably clocked K6-2 system, such applications will still run faster on the K6-2 with Linux than on the next lower clock grade Pentium II with Linux, i.e., Linux on a K6-2/400 would probably deliver marginally superior performance to Linux on a PII/350. Another way to look at the K6-2 performance is that using pgcc and Linux on a K6-2/300 would produce a program that ran only 2% slower than if it were built using Visual C and NT on a PII/400. Linux appears to be a very viable option on AMD.

For users who develop their own code, especially when that code involves a substantial amount of computation, Linux delivers outstanding performance.