One company takes the initiative and saves time and money using Linux and a Beowulf cluster.

ImageLinks Inc., of Melbourne, Florida, processes large satellite and aerial images for commercial businesses. This job requires processing gigabytes of image data through computationally expensive algorithms for three-dimensional projections, image processing and complex data fusions. This article describes the conversion of ImageLinks to Linux and the resulting benefits.

In 1996, ImageLinks was allowed to commercialize previously classified government software. This consisted of over 5,000 source code files of object-oriented C++ code that had been developed over a period of 15 years. The business was established on high-end SGI and Sun platforms with associated servers. We were leasing over a half-million dollars' worth of equipment, resulting in a monthly payment of over $15,000. Aside from the equipment costs, there were expensive proprietary-software licenses for compilers, tools and libraries. At the time, even memory upgrades had to be purchased through the vendor at a high cost so as not to invalidate our maintenance agreements.

Figure 1. Screen shot of the bWatch program of the ImageLinks Cluster. The red lines indicate communication between nodes and demonstrate that the process is CPU-bound.

Several of the technical staff were already using Linux on home machines, and we wondered what would be involved in porting our production software. After a discussion one day at lunch, we stopped by the local computer parts store, purchased what we needed on the company credit card and started a backroom operation to port all of the code.

We installed Red Hat 5.2 and began the porting process. Over the next couple of months Dave Burken and Ken Melero passed the project back and forth, finding and correcting platform dependencies. At the time, the main roadblock was heavily templated code that the compiler didn't handle correctly. With the release and installation of Red Hat 6.0, the GCC compiler was able to handle the templates directly, and the porting effort was completed rapidly.

Our initial assumption was that a Linux port on Intel platforms would result in a much more cost-effective solution, but we didn't believe that the performance would match the higher-end workstations. Fortunately, we were wrong on the second assumption. The first indications that we would gain significant performance improvements on the Linux platforms came from compilation times. A “make World” to compile all of the source code for our software would take anywhere from 10 to 12 hours on the SGI Indigo 2s. The same compilation completed in less than two hours on a dual-processor Pentium box. Even more telling was the size of the generated executables: the gcc tools produced executables approximately half the size of those produced by the proprietary compilers. We took this as an indication of the superior code optimization that is one of the many benefits of the open-source development tools. The performance gain was quite evident when we deployed a couple of test Linux machines into production. The most extreme example was cross-sensor image fusion runs.

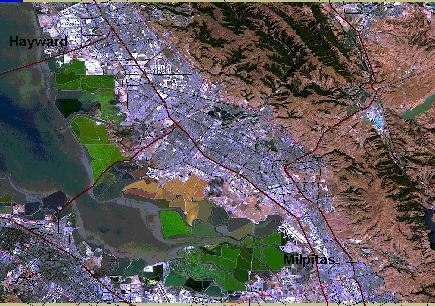

Cross-sensor fusion products are made by combining different classes of satellite images in order to create a new product. For example, we often combine high-resolution black-and-white imagery with low-resolution multispectral (color) imagery. The images are typically acquired from different points of view, at different resolutions, scales and times. All of these factors are taken into consideration as complex transformations are performed in three-dimensional space to project from the satellite image to an internal three-dimensional model of the space. Once this is performed, intelligent resamplers traverse the three-dimensional model to combine the pixels into the desired map projection and scale. This involves the processing of gigabytes of digital image data through complex image processing and three-dimensional transforms. It was not unusual for some of these production runs to take a weekend of processing on the proprietary workstations. With the Linux machines, we have observed almost an order of magnitude increase in performance on these fusions. This dramatic increase is due to the higher performance of commodity hardware coupled with optimized code from the software tools.

The next major benefit came from applying Beowulf clusters to our production runs. A Beowulf cluster can be simply explained as a bunch of computers linked together with commodity networking to form a cost-effective supercomputing solution. Most installations use Linux boxes with optimized kernels, linked together over Ethernet on a local network. One node is designated as the master node, controlling the scheduling of the slave nodes and all communications with the outside world. In the past, supercomputers required the software to be handcrafted for the specific architecture of the machine. Recent advances in parallel libraries such as PVM and MPI have made this task much more generic. With these libraries, programmers can identify areas of the code that can be made parallel, and the libraries then take care of the details of mapping that code to the supercomputer architecture. Fortunately, our algorithms are extremely CPU-intensive and coarsely parallel. In other words, the codes are dominated by floating-point mathematical computations, and the problems can be split into parts that don't require significant communication between the processors. Our implementation involved segmenting the imagery into tiles and passing them out to different boxes for processing.
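The tile-based split described above can be illustrated with a minimal sketch. This is not our production C++/PVM code: it is plain Python, and the names `make_tiles`, `process_tile` and `run_master`, as well as the pixel-inversion step standing in for the real per-tile computation, are hypothetical. The `mapper` argument is an assumption used to mark where a parallel dispatcher would be plugged in.

```python
from typing import Callable, List, Tuple

# A tile is (first_row, first_col, row_count, col_count); hypothetical layout.
Tile = Tuple[int, int, int, int]
Image = List[List[int]]


def make_tiles(height: int, width: int, tile_size: int) -> List[Tile]:
    """Cut an image of the given size into tiles; edge tiles may be smaller."""
    tiles = []
    for r in range(0, height, tile_size):
        for c in range(0, width, tile_size):
            tiles.append((r, c,
                          min(tile_size, height - r),
                          min(tile_size, width - c)))
    return tiles


def process_tile(job: Tuple[Image, Tile]) -> Tuple[Tile, Image]:
    """Worker step. Inverting 8-bit pixels is a stand-in for the real,
    much more expensive projection and resampling work."""
    image, (r0, c0, rows, cols) = job
    block = [[255 - image[r][c] for c in range(c0, c0 + cols)]
             for r in range(r0, r0 + rows)]
    return (r0, c0, rows, cols), block


def run_master(image: Image, tile_size: int,
               mapper: Callable = map) -> Image:
    """Master step: hand out tiles, then stitch results into the output.
    Tiles never overlap, so workers need no communication with each other."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    jobs = [(image, tile) for tile in make_tiles(height, width, tile_size)]
    for (r0, c0, rows, cols), block in mapper(process_tile, jobs):
        for dr in range(rows):
            out[r0 + dr][c0:c0 + cols] = block[dr]
    return out
```

With the default `mapper=map` the tiles are processed one after another on a single machine. Because each tile is independent, a parallel mapper (for example `multiprocessing.Pool.map` on one box, or a PVM or MPI dispatcher across a cluster) could be substituted without changing the tiling or stitching logic.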

We built a 14-node cluster, wired PVM into our code and observed linear scaling in performance as we added processors to the cluster. A trace of the execution shows brief periods of communication as data is passed to the nodes, after which the nodes spend a considerable amount of time performing the necessary calculations. This turns out to be an ideal situation for the application of clustering: to go faster and push more data, we can just add more processors. With the Beowulf cluster in place, the run time of the complex cross-sensor fusion jobs dropped by another order of magnitude. Jobs that took a weekend on the proprietary machines, and were reduced to hours on a single Linux machine, can now run in minutes through the Beowulf cluster.
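A simple back-of-the-envelope model shows why short communication phases and long compute phases lead to near-linear scaling. The model and the function name `estimated_speedup` are assumptions made for illustration, not measurements from our cluster: the cluster run time is treated as a fixed communication cost plus the compute time divided evenly across the nodes.

```python
def estimated_speedup(compute_seconds: float,
                      comm_seconds: float,
                      nodes: int) -> float:
    """Speedup over a single machine under a simplified model:
    cluster_time = comm_seconds + compute_seconds / nodes."""
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    cluster_time = comm_seconds + compute_seconds / nodes
    return compute_seconds / cluster_time


# Hypothetical numbers: one hour of computation, ten seconds of data passing.
for n in (1, 2, 4, 8, 14):
    print(n, round(estimated_speedup(3600.0, 10.0, n), 2))
```

As long as the communication term stays small relative to each node's share of the computation, the speedup stays close to the node count. Once the per-node compute time shrinks toward the communication time, adding nodes helps less.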

In addition to the performance and cost gains, our business has seen further benefits, including improved stability, better documentation and rapid software updates.

Mark Lucas is the chief technical officer of ImageLinks Inc. He is the founder of remotesensing.org, which promotes open-source development of remote sensing and geographical information systems software. He has a BS in Electrical Engineering and an MS in Computer Science, and is a retired officer of the United States Air Force.

Figure 2. A section of a fused satellite image of Melbourne, Florida. This image was formed with the color from Landsat 5 combined with the 5m spatial resolution of the Indian IRS 1C satellite.

Figure 3. Composite image of the Milpitas, California, area. Multiple satellite images and vector layers are fused together to make the product.

Figure 4. The ImageLinks Beowulf cluster consists of 12 nodes, RAID disk drives, a 100BT Ethernet switch and power conditioning.

Figure 5. Four 650MHz Pentium III nodes with 384MB of memory in a 4U rack-mounted unit.

Figure 6. Jeff Largent testing the nodes before they are mounted in the rack.