Some interesting intellectual property issues surfaced recently. With the settlement between Microsoft and TomTom, Martin Steigerwald thought it would be a good idea to replace VFAT as removable media's standard cross-platform filesystem under Linux. No sense risking a lawsuit from Microsoft, he felt. But, although there was some support for the idea, there doesn't seem to be an easy way to address VFAT's ubiquity. Most filesystem projects would like to gain as many users as possible, so starting a filesystem project whose goal is to migrate all of VFAT's users to it would be nothing new. Still, Martin's idea may find some adherents. Mark Williamson suggested as an alternative the possibility of a filesystem over USB, which would let each device use whatever on-disk filesystem it chose, while providing seamless communication between them, at least in theory.

It's not clear what, if anything, will come out of that debate; the modern patent system is truly broken. But Microsoft apparently has not bothered about VFAT in Linux yet, so maybe the kernel folks will decide to wait until something more threatening happens.

Meanwhile, Steven Rostedt has applied for a software patent, specifically so he can include his code in the kernel without fear that some other company might try to patent the algorithm and use it against the Open Source community. Andi Kleen thought at first that Steven had the standard evil motive and gave him a pretty good dressing down, saying that the kernel really should avoid using patented algorithms anywhere. But Steven explained the situation, and Alan Cox also encouraged people to patent their algorithms and release them for free to the Free Software world.

After staying with quilt for quite a while, Bartlomiej Zolnierkiewicz finally moved the IDE subsystem to a git repository. Apparently, the various IDE developers had been making noise about preferring git, and he finally gave in. git has been making steady inroads, although it's hard to make a real estimate of how many people in the world really use it. Commercial version control software has a number of features that git doesn't yet have, which seem to be high on the list of priorities for companies considering switching to git. One feature is the ability to check out only a portion of the repository. Letting developers check out the entire tree and use version control on their local copy is one of git's great powers, but for some very large projects, that might not be economical or secure. Regardless, git works quite well for kernel development, and at least in the near term, it's unlikely to develop many complex features that don't directly relate to kernel development.

Greg Banks from SGI announced that some of its filesystem software would be coming out under the GPL version 2. SGI folks apparently had just taken a batch of code that seemed to have some useful parts and decided to quit their own work on it and just give it away to open-source people who could benefit from it. Some of the code was obviously useful, such as tools designed to put heavy loads on a given filesystem and detect certain types of corruption, but other code relies too much on internal SGI infrastructure and would have to be purged of all that before it really could be useful. In any case, Greg made it clear that SGI would not be supporting any of the code.

People always have been interested in doing more work with less effort. This drive reaches its peak when work is being done, even though you aren't actually doing anything. With Linux, you effectively can do this with the trio of programs at, batch and cron. So, now your computer can be busy getting productive work done, long after you've gone home. Most people have heard of cron. Fewer people have heard of at, and even fewer have heard of batch. Here, you'll find out what they can do for you, and the most common options for getting the most out of them.

at is actually a collection of utilities. The basic idea is that you can create queues of jobs to run on your machine at specified times. The time at which at runs your job is specified on the command line, and almost every time format known to man is accepted. The usual formats, like HH:MM or MM/DD/YY, are supported. The standard POSIX time format of [[CC]YY]MMDDhhmm[.SS] is also supported. You even can use words for special times, such as now, noon, midnight, teatime, today or tomorrow, among others. You also can do relative dates and times. For example, you could tell at to run your job at 7pm three days from now by using 7PM + 3 days.
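To make the relative-time arithmetic concrete, here is a minimal Python sketch of how a specification like 7PM + 3 days might resolve to a calendar date. It is not at's own parser. The function name resolve_at_time is made up for illustration, and the rule that a time of day that has already passed rolls over to the next day before the offset is added is an assumption about at's behavior.

```python
from datetime import datetime, timedelta

def resolve_at_time(now, hour, minute, days):
    """Approximate when `at HH:MM + N days` would fire (hypothetical helper)."""
    # Start from the requested time of day on the current date.
    base = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    # Assumption: if that time of day has already passed, use tomorrow instead.
    if base <= now:
        base += timedelta(days=1)
    # Then apply the relative offset.
    return base + timedelta(days=days)

if __name__ == "__main__":
    print(resolve_at_time(datetime.now(), 19, 0, 3))
```

Running it in the morning gives 7pm three days from today; running it after 7pm gives one day later, under the rollover assumption above.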

at reads the commands to run from standard input, which you finish off with a Ctrl-D. You also can place all of the commands to run in a text file and tell at where to find it by using the command-line option -f filename. at uses the current directory at the point of invocation as the working directory.

By default, at dumps all of your jobs into one queue named a. But, you don't need to stay in that one little bucket. You can group your jobs into a number of queues quite easily. All you need to do is add the option -q x to the at command, where x is a letter. This means you can group your jobs into 52 queues (a–z and A–Z). This lets you use some organization in managing all your after-hours work. Queues with higher letters will run with a higher niceness. The special queue, =, is reserved for jobs currently running.

So, once you've submitted a bunch of jobs, how do you manage them? The command atq prints the list of your upcoming jobs. The output of the list is job ID, date, hour, queue and user name. If you've broken up your jobs into multiple queues, you can get the list of each queue individually by using the option -q x again. If you change your mind, you can delete a job from the queue by using the command atrm x, where x is the job ID.

Now, what happens if you don't want to overload your box? Using at, your scheduled job will run at the assigned time, regardless of what else may be happening. Ideally, you would want your scheduled jobs to run only when they won't interfere with other work. This is where the batch command comes in. batch behaves the same way at does, but it will run the job only once the system load drops below a certain value (usually 1.5). You can change this value when atd starts up: starting atd with the command-line option -l xx tells it not to run batch jobs unless the load is below the value xx. Also, batch defaults to putting your jobs into the queue b.
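The load test that batch relies on is easy to picture in code. The following Python sketch is only an illustration of the idea, not how atd is implemented. The function names are hypothetical, the default of 1.5 is the usual value quoted above, and comparing against the one-minute load average is an assumption.

```python
import os

def load_allows_batch(load_average, threshold=1.5):
    """Return True when the load is low enough for a batch job to start."""
    return load_average < threshold

def current_load():
    """One-minute load average (Unix only; an assumption about which figure matters)."""
    return os.getloadavg()[0]

if __name__ == "__main__":
    load = current_load()
    print("load %.2f, batch job may run: %s" % (load, load_allows_batch(load)))
```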

These tools are great for single runs of jobs, but what happens if you have a recurring job that needs to run on some sort of schedule? This is where our last command, cron, comes in. As a user, you actually don't run cron. You instead run the command crontab, which lets you edit the list of jobs that cron will run for you. Your crontab entries are lines containing a time specification and a command to execute. For example, you might have a backup program running at 1am each day:

0 1 * * * backup_prog

cron accepts a wide variety of time specifications. The fields available for your crontab entries include:

minute: 0–59

hour: 0–23

day of month: 1–31

month: 1–12

day of week: 0–7 (0 and 7 both mean Sunday)

You can use these fields and values directly, use groups of values separated by commas, use ranges of values or use an asterisk to represent any value. You also can use special values:

@reboot: run once, at startup

@yearly: run once a year (0 0 1 1 *)

@annually: same as @yearly

@monthly: run once a month (0 0 1 * *)

@weekly: run once a week (0 0 * * 0)

@daily: run once a day (0 0 * * *)

@midnight: same as @daily

@hourly: run once an hour (0 * * * *)
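If you want to check what a crontab time specification actually covers, a small Python sketch like the following can expand each field into the values it matches. This is an illustration only, not cron's own parser. The names SPECIALS, FIELDS, expand_field and expand_schedule are made up for this example, and it handles just the forms described above: single values, comma-separated groups, ranges and the asterisk. @reboot is left out because it has no five-field equivalent.

```python
# Shorthand names and the five-field schedules they stand for.
SPECIALS = {
    "@yearly": "0 0 1 1 *",
    "@annually": "0 0 1 1 *",
    "@monthly": "0 0 1 * *",
    "@weekly": "0 0 * * 0",
    "@daily": "0 0 * * *",
    "@midnight": "0 0 * * *",
    "@hourly": "0 * * * *",
}

# Field names with their lowest and highest allowed values.
FIELDS = [
    ("minute", 0, 59),
    ("hour", 0, 23),
    ("day of month", 1, 31),
    ("month", 1, 12),
    ("day of week", 0, 7),
]

def expand_field(field, low, high):
    """Expand one field such as '*', '5', '1-3' or '1-3,7' into a sorted list."""
    values = set()
    for part in field.split(","):
        if part == "*":
            values.update(range(low, high + 1))
        elif "-" in part:
            start, end = part.split("-")
            values.update(range(int(start), int(end) + 1))
        else:
            values.add(int(part))
    return sorted(values)

def expand_schedule(spec):
    """Map each field name to the list of values the schedule matches."""
    spec = SPECIALS.get(spec, spec)
    parts = spec.split()
    return {name: expand_field(part, low, high)
            for part, (name, low, high) in zip(parts, FIELDS)}

if __name__ == "__main__":
    print(expand_schedule("0 1 * * *"))
```

For the backup example, the minute field expands to just 0 and the hour field to just 1, while the other three fields match every allowed value.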

Now that you have these three utilities under your belt, you can schedule those backups to run automatically, or start a long compile after you've gone home, or make your machine use up any idle cycles. So, go out and get lots of work done, even when nobody is home.

In the November 2008 UpFront section, we published a little piece describing what “Cloud Computing” really meant. I'm a big fan of cool buzzwords, but for some reason, “The Cloud” is a term that always has seemed unnerving. Don't get me wrong; the concept is great. It's just that so many companies seem to be touting their new “cloud solution” to entice people with their hipness.

As much as I'd love to see the term change, I think it's here to stay. And, thanks to Linux, it's going to be quite a contender for replacing traditional server farms. Companies like Amazon with its EC2 or Google with its massive numbers of abstracted computer clusters are proving that Linux is the perfect way to throw serious computing muscle into large-scale clouds.

Once more, Linux is taking a huge market share and getting little praise for it. Linux is the premier choice for cloud computing providers, because it's affordable, scalable and, quite frankly, cheap. Now if only we can get a different name to stick—maybe S.H.A.W.N. (Superior Horsepower Abstracted Windows-lessly in a Network) computing? Yeah, probably not.

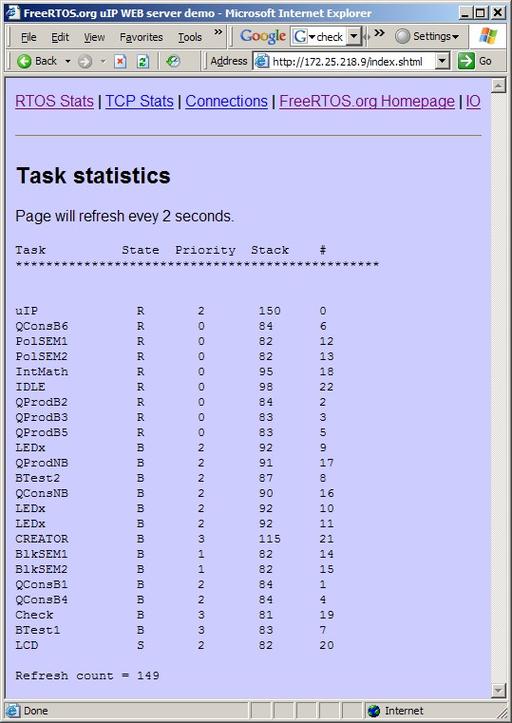

FreeRTOS is an open-source mini-real-time kernel with preemptive multitasking and coroutines. It provides queues for intertask communication, and binary semaphores, counting semaphores, recursive semaphores, mutexes and mutexes with priority inheritance for task synchronization. It can run with as little as 1K bytes of RAM and 4K bytes of ROM.

FreeRTOS has been ported to 19+ architectures that include 8-, 16- and 32-bit processors. The core code is mostly written in C and is compatible with most C compilers. The distribution comes with numerous samples for a number of different development boards. Many of the samples include an embedded Web server.

FreeRTOS is licensed under a modified GPL such that it can be used in commercial applications without the need to release your application source, as long as you don't modify the core FreeRTOS code. There also are commercially licensed versions and a version that has been certified for use in safety-critical applications.

FreeRTOS Task Stats (from www.freertos.org)

1. Millions of Netbooks shipped in first quarter 2009: 4.5

2. Percent increase in Netbook shipments from first quarter 2008: 700

3. Netbook shipments as a percent of all PC shipments in first quarter 2009: 8

4. Billions of PC systems shipped to date: 2.9

5. Billions of ARM processors shipped to date: 10

6. Median hourly wage (US/all occupations): $15.57

7. Median hourly wage (US/computer and mathematical science occupations): $34.26

8. Median hourly wage (US/health-care practitioner and technical occupations): $27.20

9. Median hourly wage (US/farming, fishing and forestry occupations): $9.34

10. Median hourly wage (US/food preparation and serving-related occupations): $8.59

11. Number of language front ends supported by GCC: 15

12. Number of back ends (processor architectures) supported by GCC: 53

13. Worldwide number of official government open-source policy initiatives: 275

14. Millions of active .com domain registrations: 80.5

15. Millions of active .org domain registrations: 12.2

16. Millions of active .net domain registrations: 7.6

17. Millions of active .info domain registrations: 5.1

18. Millions of inactive (deleted) .com domain registrations: 301.2

19. US National Debt as of 05/03/09, 7:36:12pm MST: $11,250,870,541,216.70

20. Change in the debt since last month's column: $115,410,006,992.80

1–3: IDC

4: Metrics 2.0

5: ARM Holdings

6–10: BLS (Bureau of Labor Statistics)

11, 12: Wikipedia

13–18: Domain Tools

19: www.brillig.com/debt_clock

20: Math

The Kindle 2 was Amazon's answer to concerns over the shortcomings of its immensely popular ebook reader. Version 2 improved on the form factor, usability and many other issues that annoyed users—everything except the screen size.

That's where the new model, the DX, comes into play. This Kindle boasts an 8.5 x 11" screen, which is conveniently the same size as standard US letter paper. The screen rotates, is rumored to have better contrast and, not to beat a dead horse, it's huge! Due to the size of the Kindle DX, sideways scrolling for things like PDF documents will be a thing of the past.

On the downside, the Kindle DX costs almost $500 and was released right on the heels of the Kindle 2. Those folks who just shelled out $359 for their shiny (almost) new book reader will be hard-pressed to spend anything, much less $489, on a device that adds only a larger screen that rotates and displays PDFs. But, if you were holding out because you thought the screen was too small, the Kindle DX might be just the ticket for you. Besides, don't we all need another Linux device in our lives?

This past May, I had the privilege of attending Penguicon 7.0 in Detroit, Michigan, as a “Nifty Guest”. It's not a big convention, with a little more than 1,000 attendees, and it's not even solely devoted to Linux. But, that seems to be part of the magic. Although at times it's a bit unnerving to see people walking around dressed in furry suits (Penguicon is also a science-fiction convention, you see), the mix of people with varying levels of technical expertise is actually quite refreshing.

It's not terribly often a panel on Linux adoption on the desktop can be attended by both those familiar with using Linux and those just curious about it. The ability to bring those folks together so each can understand the other's viewpoint is truly invaluable. Add to that some of the geekiest guests, craziest entertainment and all-you-can-drink Monster energy drinks, and Penguicon really sets itself apart as a conference that will bring you back year after year. As the entry fee is less than $50, making that yearly trek isn't even terribly painful on the pocketbook.

Are the smaller Linux conventions going to take over and grow while the larger ones start dwindling? I won't say it's going to happen, but I certainly wouldn't be surprised. As for myself, I'm going to try getting to the Ohio LinuxFest. If you look around, you'll probably find one close to you too. And, if not, consider starting one yourself!

Computer Science is no more about computers than astronomy is about telescopes.

—E. W. Dijkstra

Java is the single most important software asset we have ever acquired.

—Larry Ellison

I think there is a world market for maybe five computers.

—IBM Chairman Thomas Watson, 1943

The world potential market for copying machines is 5,000 at most.

—IBM to the founders of Xerox, 1959

Two years from now, spam will be solved.

—Bill Gates, World Economic Forum 2004

The nice thing about standards is that there are so many to choose from.

—Andrew S. Tanenbaum

Why is it drug addicts and computer aficionados are both called users?

—Clifford Stoll

Here are the all-time most-popular articles on LinuxJournal.com. Have you read them yet?

“Why Python?” by Eric Raymond: www.linuxjournal.com/article/3882

“Boot with GRUB” by Wayne Marshall: www.linuxjournal.com/article/4622

“Building a Call Center with LTSP and Soft Phones” by Michael George: www.linuxjournal.com/article/8165

“GNU/Linux DVD Player Review” by Jon Kent: www.linuxjournal.com/article/5644

“Python Programming for Beginners” by Jacek Artymiak: www.linuxjournal.com/article/3946

“Chapter 16: Ubuntu and Your iPod” by Rickford Grant (excerpt from Ubuntu Linux for Non-Geeks: A Pain-Free, Project-Based, Get-Things-Done Guidebook): www.linuxjournal.com/article/9266

“Monitoring Hard Disks with SMART” by Bruce Allen: www.linuxjournal.com/article/6983

“The Ultimate Distro” by Glyn Moody: www.linuxjournal.com/node/1000150

“Streaming MPEG-4 with Linux” by Donald Szeto: www.linuxjournal.com/article/6720

“The Ultimate Linux/Windows System” by Kevin Farnham: www.linuxjournal.com/article/8761