What's better than a lab full of thin clients? Ten labs full of thin clients! This article shows how to scale LTSP. If you think more is better, read on!

My last two articles have focused on Linux thin clients. I've covered how to set them up, how to administer them and even how best to tweak your server to meet your needs. This article finishes the series by describing how to scale LTSP in large environments. There are a few different methods, and each has its advantages and disadvantages.

One of the options for a large LTSP rollout is not to scale at all. This may seem like a cop-out, but since LTSP works so well in a classroom (or similar) environment, adding a second network card to your workstation-class computers is an easy way to serve 4–5 thin clients per classroom. In fact, if your network infrastructure is old and can't support the large bandwidth requirements thin clients demand, this type of setup is perfect. The high bandwidth is managed by a cheap desktop switch, and the only traffic on your main building network is for Internet and file sharing.

When you're setting up your network in this way, it's important to realize the thin clients in one classroom won't be able to see the thin clients in another classroom. Since every classroom has its own server, every server sets up its own NAT for its thin clients to live behind. If you plan to do something fancy like sharing a printer connected to a thin client, keep in mind only the server will be visible to the rest of the network. (You still can get around this limitation by sharing the thin-client-connected printer via the classroom server. Linux is incredibly flexible!)

If a classroom-server-type setup sounds ideal for you, setting it up is as simple as configuring the classroom workstation (often the “teacher station”) as an LTSP server. It still will function perfectly fine as a workstation, but because it will be running the LTSP services in the background, it will share its resources with the thin clients connected to the second Ethernet card. Depending on the speed of the workstation, and whether your thin clients are running local apps, a mid-range workstation can support anywhere from three to eight thin clients. Remember, running Firefox and Flash as local apps will really take a huge burden off the teacher's workstation.

Oddly enough, the single NIC setup is the toughest to set up, the most difficult to load balance, and the hardest to update, but it's the system I use personally. I suspect it's because this used to be the only way to scale, and I'm comfortable, so I'm reluctant to change. I know, it's a pitiful excuse, but a truthful one. Basically, in this scenario, you have any number of servers running LTSP in your server closet, using only a single network interface to connect to the network. One of the servers, or more commonly a separate DHCP server, tells the clients which server to connect to. I usually chop up the network by location or purpose, and point similar computers at a common server.

Because multiple DHCP servers on a network can be messy, I recommend using a separate computer for DHCP and disabling the service on each LTSP server. You can copy the dhcpd.conf file from one of the LTSP servers and tweak it to serve your needs. You can leave a global directive to point all clients to a single LTSP server by default, and then manually add your “chunks” of clients one by one. Here's a snippet of my dhcpd.conf file, specifying a group of clients to boot to a specific server:

    # LTSP Server 1 clients
    group {
        next-server 192.168.1.200;  # Server 1's IP address
        filename "/ltsp/i386/pxelinux.0";

        # Hosts
        host client1 { hardware ethernet 00:90:c2:cd:85:60; fixed-address 192.168.1.101; }
        host client2 { hardware ethernet 00:16:cb:bc:ad:37; fixed-address 192.168.1.102; }
    }

Remember to base your dhcpd.conf file on a file from an LTSP server, so you get all the pertinent thin-client options in the global section of the conf file.

In the above section of the conf file, you'll notice the next-server statement. That tells the thin client where to go for its TFTP boot file. The filename directive tells the client what boot file to download from the TFTP server. It is possible to host the PXE boot files on a central server, and then point the thin clients to another server for the rest of the boot process, but it gets complicated really quickly. I find it's much easier to hand the thin client off to the LTSP server right inside the dhcpd.conf file. It means each LTSP server must be running TFTPD, but since each server is already waiting for thin clients to connect, it's not a big deal.
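If you have more than a handful of clients, typing those group blocks by hand gets old fast. Here is a minimal Python sketch that renders one block per server from a list of clients. The function name `render_group` and the label text are my own inventions for illustration; the addresses and the boot file path are simply the ones from the snippet above, so substitute your own.

```python
from typing import List, Tuple

# (hostname, MAC address, fixed IP address) for one thin client
Client = Tuple[str, str, str]


def render_group(label: str, server_ip: str, clients: List[Client],
                 boot_file: str = "/ltsp/i386/pxelinux.0") -> str:
    """Render one dhcpd.conf group block that boots `clients` from `server_ip`.

    `render_group` is a hypothetical helper, not part of LTSP or ISC DHCP.
    """
    lines = [
        f"# {label} clients",
        "group {",
        f"    next-server {server_ip};",      # TFTP server the client boots from
        f'    filename "{boot_file}";',       # boot file fetched over TFTP
    ]
    for name, mac, ip in clients:
        # Doubled braces produce literal { and } in an f-string.
        lines.append(
            f"    host {name} {{ hardware ethernet {mac}; fixed-address {ip}; }}"
        )
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_group(
        "LTSP Server 1",
        "192.168.1.200",
        [
            ("client1", "00:90:c2:cd:85:60", "192.168.1.101"),
            ("client2", "00:16:cb:bc:ad:37", "192.168.1.102"),
        ],
    ))
```

Paste the output below the global section of your dhcpd.conf, and restart the DHCP service as you would after any manual edit.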

If you do the DHCP configuration/trickery above, configuring each LTSP server is pretty much the same as the standard server model. You still need to create the chroot, and you still need to run ltsp-update-image anytime you make a change to the chroot. It isn't an efficient way to manage a network, but it's conceptually very simple and easy to troubleshoot when something goes wrong. The big downside is that every change must be replicated on each LTSP server, and every chroot image rebuilt. If you're thinking there must be a better way, well, you're right!
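For the multi-server approach itself, the repetitive rebuild step can at least be scripted. The sketch below only builds and (optionally) runs an `ltsp-update-image` command on each server over SSH. It assumes key-based SSH logins and sudo rights on every server, and the host names are hypothetical. It does not replicate the chroot changes themselves; you still have to make those on each server first.

```python
import subprocess
from typing import List


def update_commands(servers: List[str]) -> List[List[str]]:
    """Build one ssh command per LTSP server that rebuilds its image."""
    return [["ssh", server, "sudo", "ltsp-update-image"] for server in servers]


def update_all(servers: List[str], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them one server at a time."""
    for cmd in update_commands(servers):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError if a rebuild fails,
            # so a broken server is noticed instead of silently skipped.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical host names; a dry run only prints what would be executed.
    update_all(["ltsp1.example.org", "ltsp2.example.org"], dry_run=True)
```

Starting with a dry run is deliberate: it lets you eyeball the commands before anything touches a production server.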

A few years ago, some of the brilliant folks working on the LTSP Project (Stéphane Graber was the main hero here) realized that NBD was so efficient at serving the chroot image, it really wouldn't be a bottleneck to use a single NBD chroot image and spread the actual workload of running applications across a cluster of servers. As with most great ideas, this introduced a bunch of problems, but for the most part, these problems have been addressed, and LTSP Cluster is a viable, easily scalable way to implement a large LTSP install.

There is a Web site for LTSP Cluster (www.ltsp-cluster.org), but to be honest, it's not the most up-to-date site from which to learn. The best walk-through I've been able to find is on the Ubuntu site: https://help.ubuntu.com/community/UbuntuLTSP/LTSP-Cluster. Following the directions on that site will get you a fully functional cluster just waiting to be tweaked. For the purpose of this article, I'll explain what happens in a cluster environment, so hopefully the concept makes sense. Because cluster support is arguably the least-developed aspect of LTSP, you might decide it's not worth the effort.

When running LTSP Cluster, you must dedicate a server (or virtual machine) as the NBD server for the entire network. You also can create a highly available scenario with fancy DHCP/DNS magic, but for most scenarios, a single NBD server is the way to go. The chroot environment is very similar to the traditional LTSP model, but when running the ltsp-build-client script, you have to add the --ltsp-cluster flag so the clustering options are built in.

Once the NBD image is created, separate servers, referred to as “application servers”, are added to the cluster configuration. When a client boots up, the NBD server queries the attached application servers, determines which server is least taxed and assigns the thin client to that server. From that point on, until reboot, the client runs its applications via SSH from the application server it was assigned. It still is possible (and recommended) to use local apps in order to utilize the power of the thin-client device.
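To make the "least taxed" idea concrete, here is a toy Python model of the assignment step. This is not the LTSP Cluster load balancer, which weighs real metrics reported by the application servers; the load numbers, the per-client cost and the function names are all assumptions made up for illustration.

```python
from typing import Dict, List


def pick_least_loaded(loads: Dict[str, float]) -> str:
    """Return the server with the lowest load; ties go to the first name alphabetically."""
    if not loads:
        raise ValueError("no application servers available")
    return min(sorted(loads), key=lambda name: loads[name])


def assign_clients(clients: List[str], loads: Dict[str, float],
                   cost_per_client: float = 1.0) -> Dict[str, str]:
    """Assign each booting client to the least-loaded server at that moment.

    `cost_per_client` is a made-up estimate of the load one session adds.
    """
    current = dict(loads)  # work on a copy so the caller's dict is untouched
    assignment = {}
    for client in clients:
        server = pick_least_loaded(current)
        assignment[client] = server
        current[server] += cost_per_client
    return assignment


if __name__ == "__main__":
    print(assign_clients(["c1", "c2", "c3"], {"app1": 2.0, "app2": 0.0}))
```

In this model, a client stays on whichever server it was given, which mirrors the behavior described above: the assignment holds until the client reboots.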

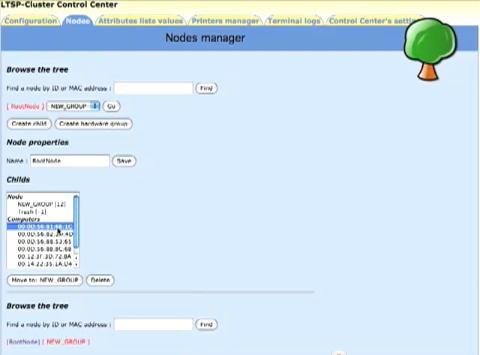

The main configuration difference with LTSP Cluster is that the lts.conf file has been replaced with a Postgres database and a Web interface. Once you get the hang of using the slightly confusing interface, you'll find it is a powerful way to organize configurations based on individual client machines (Figure 1). I also created a short video a couple years ago, demonstrating how to add nodes in the Web interface, because it can be a bit daunting at first glance: youtu.be/7QdYW-NT_sw.

Figure 1. The Web interface is confusing, but useful.

LTSP Cluster is truly the most advanced way to scale your thin-client environment. Because it does add a couple layers of complexity to your roll-out, I recommend that before you implement Cluster, you at least are familiar with how LTSP works with its traditional installation method. In my experience, when LTSP Cluster works, it works very well. When something goes wrong, troubleshooting is tougher than with a traditional setup. As with most things, your mileage may vary.

Finally, there is arguably the least-server-intensive model for implementing thin clients, and that is not to use thin clients. Yes, yes, my mastery of the obvious is astounding, but seriously, LTSP supports a “fat-client” model. Basically, rather than depending on the LTSP server for running applications, the NBD chroot image contains a complete Linux install, so every application, even down to the window manager, is run from the client. In this model, all the server is responsible for is serving out the NBD image. To enable the fat-client mode, you need to add the --fat-client flag when running ltsp-build-client to create your chroot.

As with every scenario, this has its shortcomings. Because absolutely everything is run from the client, the fat-client model requires powerful machines. In fact, the specs for a fat client are similar to those of a standalone computer, with the exception of a hard drive. The fat clients require a substantial network connection to the server, and unless you have a very fast network infrastructure, you might be better off with actual standalone computers.

If you have a bunch of workstations without hard drives, or if the maintenance time saved by having a single chroot environment for all your workstations is worth it, fat clients can be incredibly useful. I've noticed, however, that even top-end Atom-based thin-client devices tend to bog down in fat-client mode. If you're going to depend on your clients to run the complete OS, it's important to test them first to make sure they're up to snuff.

Because you may have a combination of new and old client machines, it is also possible to mix and match the fat-client and thin-client model from the same server. It means creating two NBD images, but assigning the proper image is as simple as specifying a new filename in your dhcpd.conf file. This is a nice way to take advantage of a few really powerful workstations without making your slower hardware obsolete.
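As a rough illustration of that mix-and-match idea, this Python sketch emits a dhcpd.conf host entry whose filename depends on whether the machine should boot as a fat or thin client. The thin path is the one from my dhcpd.conf snippet earlier; the fat path, including the chroot name `fat-i386`, is purely hypothetical and depends on how you named your second chroot.

```python
THIN_BOOT_FILE = "/ltsp/i386/pxelinux.0"      # path used earlier in this article
FAT_BOOT_FILE = "/ltsp/fat-i386/pxelinux.0"   # hypothetical second chroot name


def host_entry(name: str, mac: str, ip: str, fat: bool = False) -> str:
    """Return a dhcpd.conf host entry that selects the boot file per client."""
    boot_file = FAT_BOOT_FILE if fat else THIN_BOOT_FILE
    # A filename inside the host declaration overrides the group/global one.
    return (
        f"host {name} {{ hardware ethernet {mac}; fixed-address {ip}; "
        f'filename "{boot_file}"; }}'
    )


if __name__ == "__main__":
    print(host_entry("oldbox", "00:90:c2:cd:85:60", "192.168.1.101"))
    print(host_entry("newbox", "00:16:cb:bc:ad:37", "192.168.1.102", fat=True))
```

Keeping the fat/thin decision as a single flag per host makes it easy to move a machine from one model to the other after you've tested how it performs.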

My last few articles have torn the LTSP system apart and looked at its juicy insides. Perhaps that's a gross metaphor, but hopefully, it's a little clearer how powerful thin clients can be for your organization. My favorite part is how many different ways LTSP can be implemented. If you're just starting the process, a few classroom labs are the perfect way to see if LTSP will work for you. In fact, by recycling older workstations and using them as thin clients, the cost of implementation is often zero. It just takes a few adventurous users willing to learn a new way to compute.

As the system administrator, you have several ways to set up your LTSP system. Table 1 gives some pros and cons of each scaling method discussed here, but if you're just starting, I recommend going with a single server and a handful of clients. Once you get to the point of scaling, you'll have a much better idea of what fits into your environment best. If you have questions along the way, feel free to drop me an e-mail or ask the professionals in the IRC #ltsp channel on Freenode.

Table 1. Scaling Models, the Pros and Cons

| Model | Description | Best for | Advantages | Disadvantages |
|---|---|---|---|---|
| Classroom Server | Workstation-class machines with an extra Ethernet card serve 3–8 clients per classroom. | Environments with poor network infrastructure and/or lack of big servers. | Minimal server costs, utilizes existing workstations as mini-servers, keeps thin-client bandwidth off main network. | Classroom thin clients isolated from each other, potential for double-NAT problems, more servers means more configurations to manage. |
| Single Ethernet | Centralized servers have a single Ethernet port, with a separate DHCP server allocating clients to servers. | Large network installations with sufficient network infrastructure. | Troubleshooting is simple, clients can be reassigned by modifying dhcpd.conf file, configuration is similar to standalone model. | Manual load balancing is cumbersome and often incorrectly done, multiple servers to configure means repeating the same admin tasks over and over, no automatic failover if server goes down. |
| LTSP Cluster | Centralized NBD server load balances over any number of application servers. | Large installations with sufficient network infrastructure and experienced LTSP administrator. | Clustering allows for central configuration of all clients and single chroot for quick updates. | Web interface slightly cumbersome, layers of complexity make troubleshooting more difficult, NBD server failure can take down entire network. |
| LTSP Fat Client | Powerful client machines load entire operating system over NBD, running all applications locally. | Powerful client machines with sufficient network infrastructure. | Client machines handle all computing, so server requirements are minimal. | Requires quite powerful client machines and solid network infrastructure. Standalone hard drive installs should be considered. |